Data

(16th August update: we updated the training and validation sets after quality control. Please use the latest version of the dataset for training and validation.)

(The evaluation code can be downloaded here.)

Test data can be downloaded here. Please note that you are required to send us the segmentation maps within 48 hours. The submission format is the same as the one used for the leaderboard. Please upload the segmentation maps to Google Drive, WeTransfer or MEGA, and share the download link with us.

Training and test data comprise nine binary segmentation tasks across six CT and MR data sets. Some of the data sets contain more than one binary segmentation (sub-)task, e.g., when different sub-structures of a tumor or of the anatomy need to be segmented.

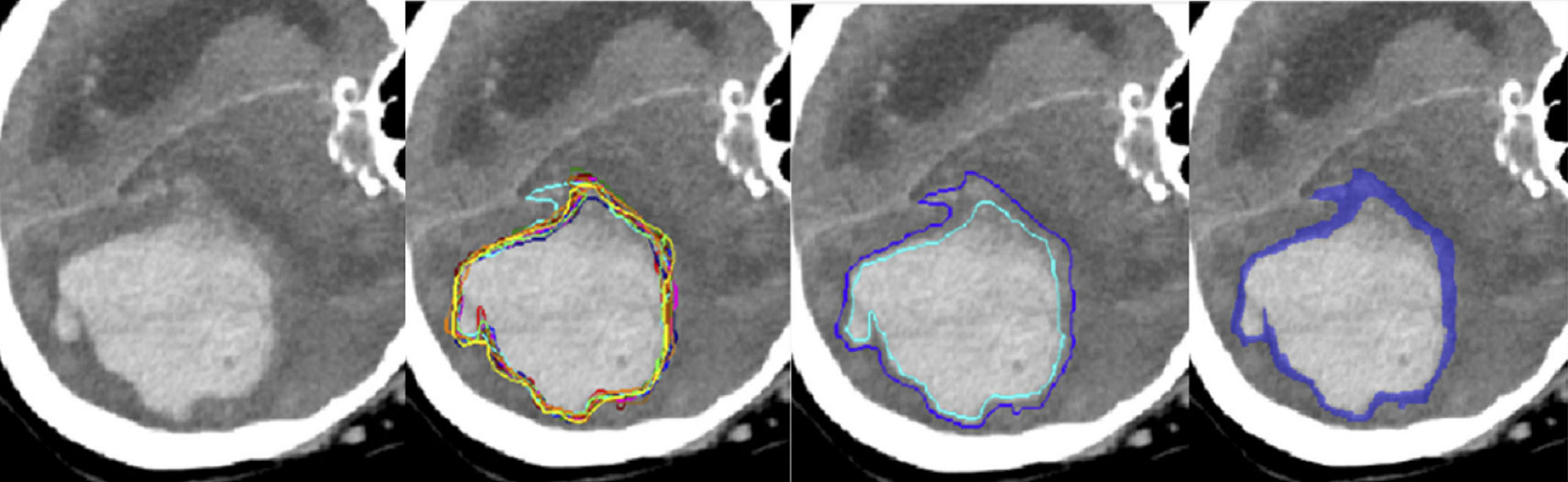

The data sets contain between 24 and 76 cases each (see the list below); each case is one selected 2D slice or, for some data sets, a 3D volume. Each structure of interest is segmented between two and seven times by different experts, and the individual segmentations are made available. The task is to delineate the structures in each given image and to match the distribution (or spread) of the experts' annotations as closely as possible.

The data are split accordingly into separate image sets, one per data set, and for each case in those sets, binary labels are given for each segmentation task.

The following data and tasks are available:

- Prostate segmentation (MRI): 55 cases, two segmentation tasks, six annotations (except for three subjects, which have only five annotations due to poor annotation quality);

- Brain growth segmentation (MRI): 39 cases, one segmentation task, seven annotations;

- Brain tumor segmentation (multimodal MRI): 32 cases, three segmentation tasks, three annotations;

- Kidney segmentation (CT): 24 cases, one segmentation task, three annotations;

- Pancreas segmentation (CT): 38 patients, 76 cases (each patient was scanned at two time points), one segmentation task, two annotations;

- Pancreatic lesion segmentation (CT): 21 patients, 42 scans (each patient was scanned at two time points), one segmentation task, three annotations.

The data are provided as NIfTI (.nii) files containing 2D slices or 3D volumes. For the brain tumor data set, each file contains the slices of all four MR modalities.

Metric and algorithmic comparison

Each participant has to segment the given binary structures and predict the distribution of the experts' labels by returning, for each task, one mask with continuous values between 0 and 1 that reproduces the averaged segmentations of the experts.
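The challenge does not prescribe how the continuous values are produced. As one possible approach, here is a minimal sketch that averages the binary outputs of several models (an ensemble) so that each pixel's value is the fraction of "votes", loosely mimicking the fraction of experts who labeled it. The helper names and the float32 output type are illustrative assumptions, not part of the challenge specification.

```python
import numpy as np


def ensemble_soft_mask(binary_predictions):
    """Average a stack of binary masks (shape: n_models x H x W[x D]) into a map in [0, 1].

    Hypothetical helper: each model's 0/1 output counts as one vote, so the
    mean is the fraction of models that labeled each pixel as foreground.
    """
    stack = np.asarray(binary_predictions, dtype=np.float32)
    if stack.ndim < 2:
        raise ValueError("expected a stack of masks with a leading model axis")
    return stack.mean(axis=0)


def to_submission_mask(probabilities):
    """Clip arbitrary model scores into [0, 1] and store them as float32 (assumed dtype)."""
    return np.clip(np.asarray(probabilities, dtype=np.float32), 0.0, 1.0)


if __name__ == "__main__":
    # Two hypothetical 2x2 binary predictions of the same slice.
    votes = [[[1, 0], [1, 1]], [[1, 0], [0, 1]]]
    print(to_submission_mask(ensemble_soft_mask(votes)))  # [[1.  0. ] [0.5 1. ]]
```

Model ensembles are only one option; a single network with a sigmoid output, passed through `to_submission_mask`, would also yield a valid continuous mask.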

Predictions and continuous ground truth labels are compared by thresholding both at predefined probability levels and measuring the volumetric overlap of the resulting binary volumes with the Dice score. The continuous ground truth labels are obtained by averaging the annotations of multiple experts. Ground truth and prediction are binarized at nine probability levels (0.1, 0.2, ..., 0.8, 0.9), and the Dice scores for all thresholds are averaged.
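The procedure above can be sketched in Python with NumPy. This is a minimal illustration, not the official evaluation code: it assumes binarization with a strict `>` comparison and treats the Dice score of two empty masks as 1.0. Both choices are assumptions that the downloadable evaluation code may handle differently.

```python
import numpy as np

# The nine probability levels 0.1, 0.2, ..., 0.9.
THRESHOLDS = [round(0.1 * k, 1) for k in range(1, 10)]


def continuous_ground_truth(annotations):
    """Average a stack of binary expert masks (n_experts x ...) into a map in [0, 1]."""
    return np.asarray(annotations, dtype=np.float64).mean(axis=0)


def dice(a, b):
    """Dice overlap of two boolean masks; assumed to be 1.0 when both are empty."""
    a = np.asarray(a, dtype=bool)
    b = np.asarray(b, dtype=bool)
    denom = a.sum() + b.sum()
    if denom == 0:
        return 1.0
    return 2.0 * np.logical_and(a, b).sum() / denom


def thresholded_dice(pred, gt, thresholds=THRESHOLDS):
    """Binarize prediction and ground truth at each level (strict '>' assumed) and average Dice."""
    pred = np.asarray(pred, dtype=np.float64)
    gt = np.asarray(gt, dtype=np.float64)
    scores = [dice(pred > t, gt > t) for t in thresholds]
    return float(np.mean(scores))


if __name__ == "__main__":
    # Three hypothetical experts annotating a 4-pixel "image".
    experts = [[1, 1, 0, 0], [1, 0, 0, 0], [1, 1, 1, 0]]
    gt = continuous_ground_truth(experts)  # [1, 2/3, 1/3, 0]
    print(thresholded_dice(gt, gt))  # 1.0
    print(thresholded_dice([1, 1, 0, 0], gt))  # about 0.822
```

In the example, the binary prediction `[1, 1, 0, 0]` matches the ground truth exactly only at the middle thresholds (0.4 to 0.6), which is why a hard mask scores lower than a mask that reproduces the experts' spread.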

Dice scores will be averaged across all tasks and all image data sets, and the participant with the best average will be named the "winner" of the challenge. Dice scores have many shortcomings, in particular in the novel application domain of uncertainty-aware image quantification. For this reason, additional metrics will be calculated and reported for the final analysis, and alternative ranking schemes will be evaluated and presented in the final challenge summary paper.
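The exact pooling order behind "averaged across all tasks and all image data sets" is not spelled out here. The sketch below assumes one plausible order: average the per-case scores within each task, then take an unweighted mean over all tasks of all data sets. The nested-dictionary input and the `challenge_score` name are hypothetical, and the sketch assumes every listed task has at least one score.

```python
from statistics import mean


def challenge_score(scores):
    """Pool per-case scores into a single number.

    `scores` is a hypothetical nested mapping: data set -> task -> list of
    per-case scores (e.g. thresholded Dice values). Assumed pooling order:
    mean over cases within each task, then an unweighted mean over all tasks.
    """
    task_means = []
    for dataset, tasks in scores.items():
        for task, case_scores in tasks.items():
            if not case_scores:
                raise ValueError(f"no scores for {dataset}/{task}")
            task_means.append(mean(case_scores))
    if not task_means:
        raise ValueError("no tasks to average")
    return mean(task_means)


if __name__ == "__main__":
    example = {
        "prostate": {"task01": [0.8, 0.9], "task02": [0.6, 0.7]},
        "kidney": {"task01": [0.5]},
    }
    print(challenge_score(example))  # about 0.667
```

Averaging per task first gives every task equal weight regardless of how many test cases it has; averaging all cases directly would instead favor the larger data sets.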

Participating in a single task only is allowed (just submit the results for the task of your choice), but the winner is determined by the average score over all tasks.

Participants do not need to use a single algorithm for all tasks.

Results of all participants with viable solutions will be reported in a summary paper that will be made available on arXiv immediately after the MICCAI conference.

Participation

Participants register via the "Join" button.

Training data with annotations are available from [here] (password: qubic2021), the validation set can be downloaded from [here] (password: qubic2021), and test data will be made available to registered participants in early September. The training data include a subset of cases (labeled as the ‘evaluation’ set) that can be used for leaderboard evaluation in the online submission system. These cases are labeled and should also be used to train the final algorithm.

For evaluation, the test sets will be released to participants at a fixed time, and participants have to upload their segmentations within 48 hours. For each task, the number of test cases is about 20% of the number of training cases.

Submission data structure

As test data, participants will receive images without annotations for all tasks. Participants are encouraged to submit segmentations (i.e., probability maps) for all nine tasks (three for brain tumor, two for prostate, one for brain growth, one for kidney, and one each for pancreas and pancreatic lesion). The submission folder should be zipped and follow the structure and naming convention of the training data folders. For example, the submission folder (results.zip) should have the following structure, where cases* stands for one folder per test case:

- Results/brain-tumor/cases*/task01.nii.gz, task02.nii.gz, task03.nii.gz

- Results/brain-growth/cases*/task01.nii.gz

- Results/prostate/cases*/task01.nii.gz, task02.nii.gz

- Results/kidney/cases*/task01.nii.gz

- Results/pancreas/cases*/task01.nii.gz

- Results/pancreatic lesion/cases*/task01.nii.gz

Timeline

- June: training data available, registration is open

- Mid July (updated: 3rd August): validation data available, the leaderboard opens (on the leaderboard, predictions are compared with the validation ground truth data, so uploading serves only to verify the submission format. The submission format for the test data will be identical.)

- 13th August (updated: 20th August): submission deadline for the optional LNCS proceedings. Please follow the submission guidelines of the BrainLes workshop.

- 10 am CET, 6th September: test data will be made available on the website, and segmentations must be completed within 48 hours. The segmentations should be uploaded and the download link sent to the organizers. Please contact the organizers for details.

- 16th September: submission deadline for a brief method description (two to three pages in LNCS format).

- 1st October: MICCAI workshop; results will be announced

- After MICCAI: the summary paper will be uploaded to arXiv

The best prizes ever

All participants with viable submissions will become co-authors of the challenge summary paper, which will be released on arXiv directly after the 2021 challenge and submitted to a community journal such as Medical Image Analysis or IEEE Transactions on Medical Imaging.

Contacts

If you have any questions, please contact qubiq.miccai@gmail.com or hongwei.li@tum.de